We are living in a time of unprecedented digital expansion. Every transaction, interaction, and operation generates data, and for many organizations, this data is becoming difficult to manage. Traditional storage infrastructure, often built on rigid hierarchies and limited hardware controllers, is struggling to keep pace with the deluge of unstructured data—videos, images, backups, and logs. As these legacy systems reach their breaking points, IT leaders are looking for a solution that combines the massive scalability of the cloud with the control and performance of on-premises infrastructure. This search has led to the rise of Object Storage Appliances, purpose-built hardware solutions designed to store, protect, and manage data at a petabyte scale and beyond.

Redefining Data Center Hardware

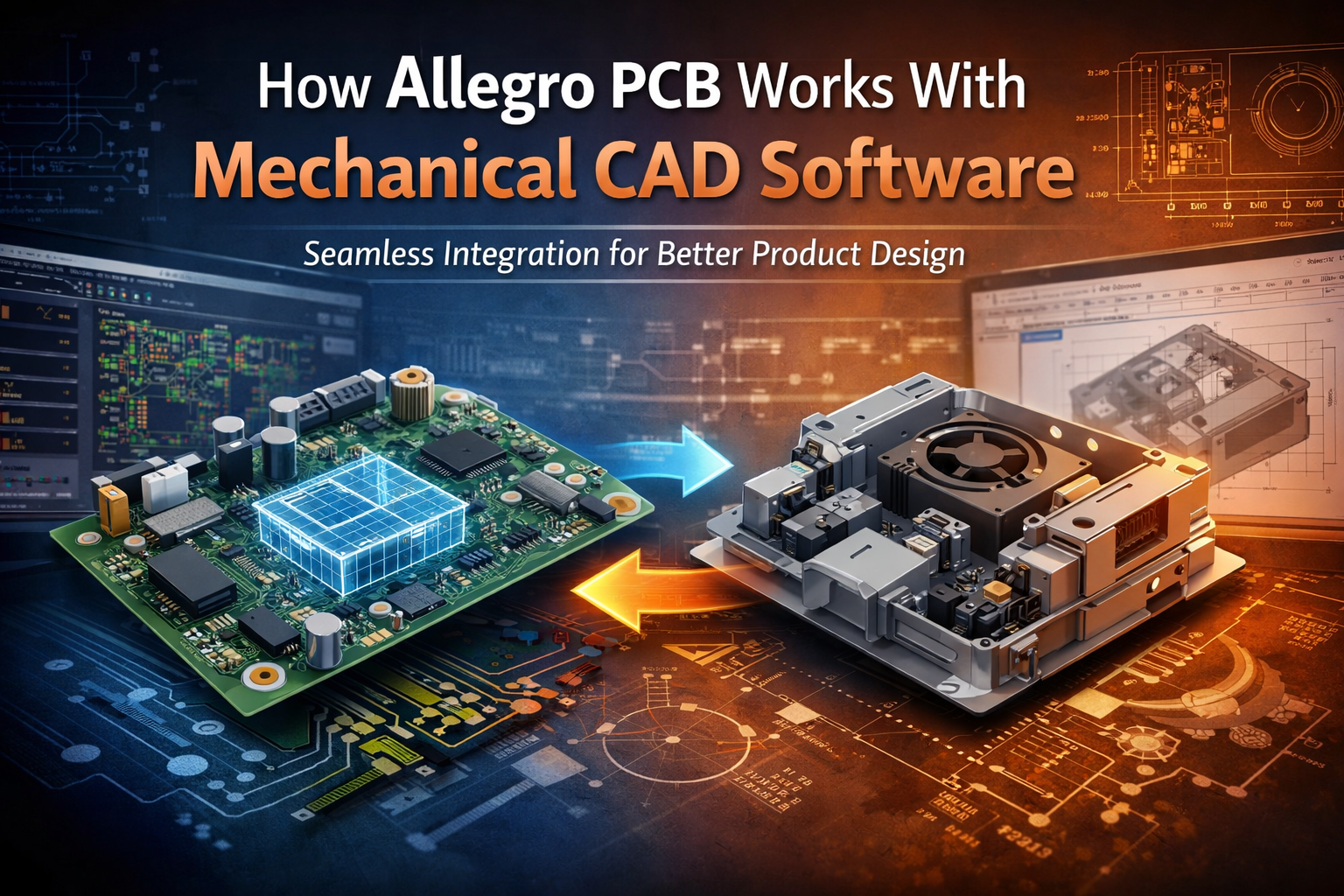

For decades, the standard approach to adding storage capacity was simple: buy a bigger box. This “scale-up” architecture, typical of Network Attached Storage (NAS) and Storage Area Networks (SAN), involves adding more hard drives to a central controller. Eventually, however, that controller becomes a bottleneck. It can only manage so many drives and process so many requests before performance nose-dives.

The modern approach flips this script. Instead of a single brain trying to manage a mountain of storage, we now use a clustered architecture.

The “Node” Concept

In this modern architecture, storage is purchased in “nodes.” A node is essentially a server that contains both computing power (CPU/RAM) and storage capacity (disk drives). When you combine these nodes, they act as a single, unified system.

This is where the magic happens. Because each node brings its own processing power to the cluster, aggregate performance grows along with capacity: more nodes means more CPUs, network ports, and drives serving requests in parallel. If you need more capacity, you simply plug in another appliance. The cluster automatically detects the new hardware, rebalances the data, and expands the available pool of storage. There is no “forklift upgrade” where you have to rip out old hardware to replace it with new. This modularity is the heart of why these systems are taking over the data center.
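Why doesn't adding a node force the cluster to reshuffle everything? Many scale-out systems use some form of consistent hashing to decide which node owns which object, though the exact placement algorithm varies by vendor. The toy ring below is a minimal sketch of that idea, not any product's implementation. The node names, the 100 virtual points per node, and the object keys are all hypothetical.

```python
import bisect
import hashlib


def _hash(value: str) -> int:
    """Map a string to a 64-bit integer position on the ring."""
    return int.from_bytes(hashlib.sha256(value.encode()).digest()[:8], "big")


class HashRing:
    """Toy consistent-hash ring; each node owns many virtual points."""

    def __init__(self, nodes, vnodes_per_node=100):
        self.vnodes_per_node = vnodes_per_node
        self._ring = []  # sorted list of (position, node_name)
        for node in nodes:
            self.add_node(node)

    def add_node(self, node: str) -> None:
        # Scatter the node's virtual points around the ring.
        for v in range(self.vnodes_per_node):
            bisect.insort(self._ring, (_hash(f"{node}#{v}"), node))

    def node_for(self, key: str) -> str:
        # Walk clockwise to the first virtual point at or after the key's hash,
        # wrapping around to the start of the ring if needed.
        idx = bisect.bisect_left(self._ring, (_hash(key), ""))
        return self._ring[idx % len(self._ring)][1]


# Hypothetical four-node cluster holding 10,000 objects.
ring = HashRing([f"node-{i}" for i in range(1, 5)])
keys = [f"object-{n}" for n in range(10_000)]
before = {k: ring.node_for(k) for k in keys}

ring.add_node("node-5")  # "plug in another appliance"
moved = [k for k in keys if ring.node_for(k) != before[k]]
print(f"{len(moved) / len(keys):.1%} of objects moved")
```

Only the objects whose ring position now falls on one of node-5's points change owner, and every one of them moves to node-5. With five equal nodes that is expected to be roughly one fifth of the data, rather than the near-total reshuffle a naive `hash(key) % node_count` scheme would cause.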

The Mechanics of Limitless Scale

The primary value proposition of this technology is its ability to grow without friction. In a traditional file system, managing a billion files is a nightmare. The system has to track the location of every single file within a complex tree of folders. As the file count grows, the metadata structures that track these files become large and slow to traverse, leading to long wait times for users and applications.

These modern appliances use a flat address space. Data isn’t stuffed into folders; it’s given a unique identifier and placed in a massive, flat pool. This eliminates the overhead of scanning through folder trees. Whether you have ten thousand files or ten billion, the time it takes to retrieve a piece of data remains virtually the same.
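To make the idea of a flat address space concrete, here is a toy in-memory model. It is an illustration only, and the class and method names are hypothetical. Every object lives in one dictionary keyed by its identifier, and a “folder” is nothing more than a shared prefix in the key.

```python
import uuid
from typing import Optional


class FlatObjectStore:
    """Toy flat namespace: one dictionary from key to bytes, no directory tree."""

    def __init__(self):
        self._objects: dict = {}

    def put(self, data: bytes, key: Optional[str] = None) -> str:
        # Callers may supply a name; otherwise generate a unique identifier.
        key = key or str(uuid.uuid4())
        self._objects[key] = data
        return key

    def get(self, key: str) -> bytes:
        # A single hash lookup, no matter how many objects are stored.
        return self._objects[key]

    def list_prefix(self, prefix: str) -> list:
        # "Folders" are only a naming convention: slashes are part of the key.
        # This toy version scans every key; real systems keep a sorted index.
        return sorted(k for k in self._objects if k.startswith(prefix))


store = FlatObjectStore()
clip_id = store.put(b"...frames...", key="videos/2024/launch.mp4")
anon_id = store.put(b"log line")  # no name given, so a UUID becomes the key
print(store.list_prefix("videos/"))  # ['videos/2024/launch.mp4']
```

Retrieving `clip_id` never walks `videos/` and then `2024/`; the full key is looked up directly, which is why lookup cost does not grow with the depth or size of a folder tree.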

Seamless Expansion

When an organization deploys object storage appliances, they are effectively future-proofing their infrastructure. Consider a scenario where a media company creates 500 terabytes of new video footage a year. With a traditional array, they might have to buy a system that is mostly empty on day one just to handle the growth expected in year three. This ties up capital in unused hardware.

With a scale-out appliance model, they can buy exactly what they need for this year. When next year arrives and capacity runs low, they simply add another node. The system scales linearly. This “pay-as-you-grow” model aligns IT spending with actual business needs, preventing the waste of over-provisioning.
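The media-company scenario can be worked through numerically. The sketch assumes, hypothetically, that each node provides 250 TB of usable capacity after protection overhead, and that the company starts from zero and accumulates 500 TB of footage per year.

```python
import math


def growth_plan(years: int, annual_tb: float, node_usable_tb: float):
    """Return (year, required_tb, total_nodes, nodes_added) for a buy-as-you-grow plan."""
    plan, owned = [], 0
    for year in range(1, years + 1):
        required_tb = year * annual_tb  # footage accumulates year over year
        total = math.ceil(required_tb / node_usable_tb)
        plan.append((year, required_tb, total, total - owned))
        owned = total
    return plan


NODE_USABLE_TB = 250  # hypothetical usable capacity per node
plan = growth_plan(years=3, annual_tb=500, node_usable_tb=NODE_USABLE_TB)
for year, required_tb, total, added in plan:
    print(f"year {year}: need {required_tb} TB -> {total} nodes (+{added})")

# Buying year-3 capacity on day one instead leaves this much idle in year 1:
upfront_tb = plan[-1][2] * NODE_USABLE_TB
print(f"idle in year 1 if bought up front: {upfront_tb - plan[0][1]} TB")
```

Under these assumptions the scale-out plan adds two nodes per year, while the up-front purchase leaves 1,000 TB sitting idle in year one.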

Efficiency Beyond Capacity: The Erasure Coding Advantage

One of the most critical, yet often overlooked, aspects of storage hardware is how it protects data from disk failure. Traditional systems use RAID (Redundant Array of Independent Disks). RAID protects data by creating copies or using parity drives. While effective for smaller systems, RAID becomes risky and inefficient at the petabyte scale. Rebuilding a failed high-capacity drive in a RAID array can take days, leaving the system vulnerable to an additional drive failure that, depending on the RAID level, can cause the loss of the entire array.

Modern storage hardware instead uses a method better suited to this scale, called Erasure Coding (EC).

How Erasure Coding Works

Imagine taking a file, breaking it into 12 data pieces, and then computing 4 additional “parity” pieces from them. The parity pieces are redundant encodings of the data, constructed so that any 12 of the 16 pieces are enough to rebuild the original file. You then scatter these 16 pieces across 16 different drives in different nodes.

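If a drive fails, or even if an entire node goes offline, the system can still serve the data by reconstructing the missing pieces from the surviving ones. Rebuilding is also faster and less risky than a RAID rebuild: instead of copying a whole replacement drive from a small group of peers, the cluster recreates the lost fragments by reading from many drives across many nodes in parallel.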
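Below is a small, self-contained illustration of the 12 + 4 scheme. Production systems commonly use Reed-Solomon codes over the byte field GF(2^8). This sketch uses the same underlying idea over the prime field GF(257), because there the arithmetic is plain modular integers. The idea: treat each column of data bytes as points on a polynomial, store extra evaluations of that polynomial as parity, and interpolate to recover anything lost. One consequence of the simplification is that a parity value can be 256, so pieces are lists of integers rather than raw bytes. The function names are hypothetical.

```python
# Toy systematic Reed-Solomon-style erasure code over the prime field GF(257).
P = 257  # prime modulus; every byte value 0..255 is a valid field element


def _interpolate_at(points, x):
    """Evaluate, at x, the unique polynomial of degree < len(points) through points (mod P)."""
    total = 0
    for i, (xi, yi) in enumerate(points):
        num, den = 1, 1
        for j, (xj, _) in enumerate(points):
            if j != i:
                num = num * (x - xj) % P
                den = den * (xi - xj) % P
        total = (total + yi * num * pow(den, P - 2, P)) % P  # Fermat inverse of den
    return total


def encode(data: bytes, k: int, m: int) -> list:
    """Split data into k data pieces and compute m parity pieces (needs k + m < P)."""
    piece_len = -(-len(data) // k)  # ceiling division
    padded = data.ljust(k * piece_len, b"\0")
    pieces = [list(padded[i * piece_len:(i + 1) * piece_len]) for i in range(k)]
    for x in range(k, k + m):
        # Parity piece x holds the data polynomial evaluated at point x, column by column.
        pieces.append([
            _interpolate_at([(i, pieces[i][col]) for i in range(k)], x)
            for col in range(piece_len)
        ])
    return pieces


def decode(surviving: dict, k: int, length: int) -> bytes:
    """Rebuild the original bytes from any k surviving pieces (index -> piece)."""
    if len(surviving) < k:
        raise ValueError(f"need at least {k} pieces, got {len(surviving)}")
    chosen = sorted(surviving)[:k]
    piece_len = len(surviving[chosen[0]])
    data_pieces = []
    for i in range(k):
        if i in surviving:
            data_pieces.append(surviving[i])
        else:
            # Recompute the missing data piece from any k surviving pieces.
            data_pieces.append([
                _interpolate_at([(x, surviving[x][col]) for x in chosen], i)
                for col in range(piece_len)
            ])
    return bytes(b for piece in data_pieces for b in piece)[:length]


data = b"video frame 000142, camera 7"
pieces = encode(data, k=12, m=4)  # 16 pieces, as in the example above
lost = {0, 5, 13, 15}  # any four of the 16 pieces may be lost
surviving = {i: p for i, p in enumerate(pieces) if i not in lost}
assert decode(surviving, k=12, length=len(data)) == data
```

Because the code is systematic, pieces 0 to 11 are the original bytes unchanged, so a healthy read needs no decoding at all; interpolation only runs for the pieces that are missing.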

- Higher Durability: EC can survive multiple simultaneous failures without data loss; the 12 + 4 layout above tolerates the loss of any 4 pieces.

- Better Efficiency: Mirroring (RAID 1 or RAID 10) consumes 50% of your raw storage capacity for protection. Erasure coding can provide higher durability while using only 20% to 30% of raw capacity for overhead (4 parity pieces out of 16 is 25%). This means you get more usable storage for every dollar spent on hardware.
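The efficiency comparison is simple arithmetic: protection overhead is the redundant share of each stripe. The sketch below applies it to a hypothetical 1,000 TB (1 PB) of raw disk.

```python
def overhead(data_units: int, redundancy_units: int) -> float:
    """Fraction of raw capacity spent on protection rather than user data."""
    return redundancy_units / (data_units + redundancy_units)


RAW_TB = 1000  # hypothetical 1 PB of raw disk

schemes = {
    "2-way mirror (RAID 1/10)": (1, 1),  # each block stored twice; one copy may be lost
    "erasure code 12+4": (12, 4),        # any 4 of the 16 pieces may be lost
}
for name, (data, redundancy) in schemes.items():
    share = overhead(data, redundancy)
    usable = RAW_TB * (1 - share)
    print(f"{name}: {share:.0%} overhead, {usable:.0f} TB usable of {RAW_TB} TB raw")
```

The mirror yields 500 TB usable and survives one lost copy of a block; the 12 + 4 code yields 750 TB usable and survives any four lost pieces of a stripe.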

Security and Sovereignty in Your Control

While the public cloud offers vast scalability, it introduces concerns regarding data sovereignty, latency, and unpredictable costs. For highly regulated industries like healthcare, finance, and government, knowing exactly where data resides is not optional; it is often a legal requirement.

Deploying on-premises hardware solves this dilemma. You gain the “infinite” feel of cloud storage, but the physical disks are sitting in your own secure rack, behind your own firewalls, subject to your own security policies.

The Ransomware Shield

Perhaps the most compelling security feature available on these systems is “immutability.” This feature allows administrators to lock specific data objects for a set retention period. During this time, the data cannot be modified or deleted by anyone; in the strictest (compliance) mode, that includes users with administrative privileges.

If a ransomware attack breaches your network and attempts to encrypt or delete your locked backups or archives, the storage system simply rejects the request, because it enforces a read-only state until the retention period expires. This gives you a protected copy of your critical data that you can restore from without paying a ransom.
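Appliances with an S3-compatible API typically expose immutability as S3 Object Lock, which offers governance and compliance retention modes. The class below is not that API. It is a toy in-memory model of the compliance-style rule, written only to show the logic: a locked object cannot be overwritten or deleted before its retain-until time, whatever the caller's role. All names are hypothetical.

```python
from datetime import datetime, timedelta, timezone


class RetentionLockedError(PermissionError):
    """Raised when a write or delete hits an object still under retention."""


class WormBucket:
    """Toy write-once-read-many bucket with a compliance-style retention lock."""

    def __init__(self):
        self._objects = {}  # key -> (data, retain_until)

    def put(self, key, data, retain_for, now):
        current = self._objects.get(key)
        if current is not None and now < current[1]:
            raise RetentionLockedError(f"{key} is locked until {current[1]}")
        self._objects[key] = (data, now + retain_for)

    def get(self, key):
        return self._objects[key][0]

    def delete(self, key, now, is_admin=False):
        _, retain_until = self._objects[key]
        # is_admin is deliberately ignored: in this mode no role can shorten the lock.
        if now < retain_until:
            raise RetentionLockedError(f"{key} is locked until {retain_until}")
        del self._objects[key]


t0 = datetime(2024, 1, 1, tzinfo=timezone.utc)
bucket = WormBucket()
bucket.put("backups/db.bak", b"clean backup", timedelta(days=30), now=t0)

# Simulated ransomware: try to overwrite, then delete with admin rights.
for attempt in (
    lambda: bucket.put("backups/db.bak", b"ENCRYPTED", timedelta(days=1), now=t0 + timedelta(days=1)),
    lambda: bucket.delete("backups/db.bak", now=t0 + timedelta(days=2), is_admin=True),
):
    try:
        attempt()
    except RetentionLockedError as err:
        print("rejected:", err)

print(bucket.get("backups/db.bak"))  # b'clean backup'
bucket.delete("backups/db.bak", now=t0 + timedelta(days=31))  # allowed once retention expires
```

In a real appliance the check is enforced inside the storage system, so compromised client credentials cannot bypass it the way they could bypass a check in client code.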

Key Use Cases for Dedicated Storage Hardware

This technology is versatile, finding a home in various sectors that generate heavy data loads.

1. The Modern Backup Target

The backup landscape has changed. Tape is too slow for rapid recovery, and standard disk arrays are too expensive for long-term retention. Object storage appliances sit in the “Goldilocks” zone. They are fast enough to restore operations quickly (providing a low Recovery Time Objective) but cheap enough to store years of backups. Their compatibility with modern backup software, combined with immutability, makes them a straightforward way to add ransomware protection to an existing backup workflow.

2. Surveillance and CCTV

Security cameras are moving to 4K resolution, and retention policies are extending from weeks to months. This creates a tsunami of video data. A clustered storage system can ingest these multiple high-bandwidth streams without dropping frames, while simultaneously allowing security teams to search and view archived footage.
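A quick sizing calculation shows how fast surveillance data adds up. The figures below are hypothetical: 200 cameras, 15 Mbit/s per 4K stream (real bitrates vary widely with codec and scene), and 90-day retention. Decimal units are used throughout (1 TB = 10^12 bytes).

```python
def surveillance_sizing(cameras: int, mbps_per_camera: float, retention_days: int):
    """Return (aggregate ingest in Gbit/s, TB written per day, TB retained)."""
    ingest_gbps = cameras * mbps_per_camera / 1000
    bytes_per_day = cameras * mbps_per_camera * 1e6 / 8 * 86_400
    tb_per_day = bytes_per_day / 1e12
    return ingest_gbps, tb_per_day, tb_per_day * retention_days


ingest, daily, retained = surveillance_sizing(200, 15, 90)
print(f"{ingest:.1f} Gbit/s sustained, {daily:.1f} TB/day, {retained:,.0f} TB retained")
# 3.0 Gbit/s sustained, 32.4 TB/day, 2,916 TB retained
```

Even this mid-sized site needs roughly 2.9 PB of capacity and a sustained 3 Gbit/s write stream before any playback or search traffic is counted.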

3. Medical Imaging (PACS/VNA)

Hospitals generate massive amounts of imaging data (X-rays, MRIs, CT scans). These images often must be kept for years or even decades and still be retrieved quickly by doctors. The metadata capabilities of object storage allow these images to be tagged with patient IDs and study dates, making the storage system itself an intelligent, searchable archive that integrates seamlessly with hospital viewing applications.
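Object stores let you attach key-value metadata to each object. Whether that metadata can be searched directly depends on the appliance's indexing features, and real PACS/VNA integration goes through DICOM. The toy store below only illustrates the principle of tagging objects and querying by tag. The class, the field names, and the patient IDs are all made up.

```python
from dataclasses import dataclass, field


@dataclass
class StoredObject:
    key: str
    data: bytes
    metadata: dict = field(default_factory=dict)


class MetadataIndexedStore:
    """Toy store that keeps user metadata next to each object and can query it."""

    def __init__(self):
        self._objects = {}

    def put(self, key, data, **metadata):
        self._objects[key] = StoredObject(key, data, metadata)

    def find(self, **criteria):
        # Return keys whose metadata matches every given field exactly.
        return sorted(
            obj.key for obj in self._objects.values()
            if all(obj.metadata.get(f) == v for f, v in criteria.items())
        )


archive = MetadataIndexedStore()
archive.put("img/0001.dcm", b"...", patient_id="P-TEST-01", modality="MR", study_date="2023-05-04")
archive.put("img/0002.dcm", b"...", patient_id="P-TEST-01", modality="CT", study_date="2024-02-11")
archive.put("img/0003.dcm", b"...", patient_id="P-TEST-02", modality="MR", study_date="2024-02-11")
print(archive.find(patient_id="P-TEST-01"))                  # ['img/0001.dcm', 'img/0002.dcm']
print(archive.find(modality="MR", study_date="2024-02-11"))  # ['img/0003.dcm']
```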

4. Artificial Intelligence Training

Training AI models requires feeding massive datasets into GPU clusters. The high throughput of local storage hardware ensures that the expensive GPUs are not sitting idle waiting for data to arrive from a remote cloud server. Keeping the data local accelerates the training pipeline and reduces the time-to-insight.
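The local-versus-remote argument comes down to link bandwidth. The sketch computes a lower bound on the time to stream a dataset once over a link, ignoring protocol overhead and caching. The 100 TB dataset and both link speeds are hypothetical.

```python
def hours_to_read(dataset_tb: float, link_gbps: float) -> float:
    """Lower bound on time to stream a dataset once over a link (ignores protocol overhead)."""
    return dataset_tb * 1e12 * 8 / (link_gbps * 1e9) / 3600


DATASET_TB = 100  # hypothetical training set
for label, gbps in [("1 Gbit/s WAN link", 1), ("100 Gbit/s local fabric", 100)]:
    print(f"{label}: {hours_to_read(DATASET_TB, gbps):.1f} h per full pass")
```

At 1 Gbit/s, a single pass over the data takes over nine days (about 222 hours); on a 100 Gbit/s local network the same pass takes a little over two hours. Training runs usually make many passes, so the gap multiplies.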

Conclusion

The era of relying on rigid, monolithic storage arrays to handle the dynamic needs of modern business is ending. As data becomes the most valuable asset an organization owns, the infrastructure used to house it must be resilient, scalable, and efficient.

Moving to a dedicated appliance model offers a strategic advantage. It allows organizations to break free from the cycle of forklift upgrades and data migrations. It provides a secure, sovereign environment that protects against modern cyber threats while delivering the performance required for next-generation applications. By treating storage not just as a locker for files, but as an intelligent, scalable platform, businesses position themselves to turn their data growth from a liability into a competitive asset.

FAQs

1. How does an object storage appliance differ from a standard server with disks?

While you can install storage software on a standard server, a dedicated appliance is tuned for performance and reliability. The hardware components (CPU, networking, disk controllers) are optimized specifically for the storage workload. Furthermore, appliances usually come with integrated support for both hardware and software from a single vendor, simplifying maintenance and troubleshooting compared to a “build-it-yourself” approach.

2. Can I mix different sizes of nodes in the same cluster?

In most modern systems, yes. This is a significant advantage over legacy arrays. You might start with nodes built on 10TB drives today, and three years from now, when 20TB drives are the standard, add nodes built on them to the same cluster. The system handles the heterogeneity, typically by placing data in proportion to each node's capacity, allowing you to maximize the lifespan of your older hardware while taking advantage of newer, denser technology.
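One well-known way to spread data over nodes of different sizes is weighted rendezvous (highest-random-weight) hashing. The sketch below shows that technique under stated assumptions. It is not the placement algorithm of any particular appliance, and the node names and weights are hypothetical, with weight standing in for capacity.

```python
import hashlib
import math
from collections import Counter


def _unit_hash(node: str, key: str) -> float:
    """Deterministic pseudo-random value strictly between 0 and 1."""
    digest = hashlib.sha256(f"{node}:{key}".encode()).digest()
    bits = int.from_bytes(digest[:8], "big") >> 12  # keep 52 bits so the ratio stays below 1.0
    return (bits + 1) / (2**52 + 1)


def pick_node(key: str, weights: dict) -> str:
    # Weighted rendezvous hashing: the node with the highest weight / -ln(h) wins,
    # so each node wins a share of keys proportional to its weight.
    return max(weights, key=lambda n: weights[n] / -math.log(_unit_hash(n, key)))


# Hypothetical cluster: two older nodes and one newer node with twice the capacity.
weights = {"old-a": 10, "old-b": 10, "new-c": 20}
counts = Counter(pick_node(f"obj-{i}", weights) for i in range(20_000))
for node, n in sorted(counts.items()):
    print(f"{node}: {n / 20_000:.1%}")
```

The larger node ends up with about half of the objects and each older node with about a quarter, so all three fill at roughly the same rate.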

3. Is this solution only for “cold” or archive data?

Historically, yes, but that has changed. With the advent of all-flash object storage appliances, these systems are now fast enough for high-performance workloads like analytics, AI training, and even serving content for high-traffic websites. They cover the spectrum from “hot” active data to “cold” deep archives.

4. What happens if a drive fails in an appliance?

The system is self-healing. When a drive fails, the appliance alerts administrators (often via a “call home” feature). Meanwhile, the system automatically begins rebuilding the data that was on the failed drive from the surviving data and parity fragments stored on other drives in the cluster. This background process ensures data integrity remains high without requiring immediate emergency intervention.

5. How difficult is the data migration from my old NAS to an object appliance?

Migration tools have become very sophisticated. Many appliances include or support “file gateway” functionality. This allows you to copy data from your old NAS using standard protocols (like SMB or NFS). Once the data is on the appliance, it is converted into objects. There are also automated software tools that can scan your old storage, identify files based on policy (e.g., “older than 6 months”), and seamlessly move them to the new appliance.
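The policy-scan step can be sketched in a few lines. The code below walks a directory tree, selects files not modified in the last 180 days (a rough stand-in for “older than 6 months”), and derives an object key from each relative path. The function names are hypothetical, and the actual copy to the appliance, which would go through the vendor's gateway or migration tool, is not shown.

```python
import os
import tempfile
import time
from typing import Iterator, Optional


def files_older_than(root: str, days: float, now: Optional[float] = None) -> Iterator[str]:
    """Yield paths under root whose last-modified time is more than `days` ago."""
    cutoff = (now if now is not None else time.time()) - days * 86_400
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            if os.stat(path).st_mtime < cutoff:
                yield path


def object_key_for(path: str, root: str) -> str:
    # Reuse the relative path as the object key, with forward slashes.
    return os.path.relpath(path, root).replace(os.sep, "/")


# Demo on a throwaway directory standing in for the old NAS share.
with tempfile.TemporaryDirectory() as share:
    os.makedirs(os.path.join(share, "projects", "2023"))
    old = os.path.join(share, "projects", "2023", "report.pdf")
    new = os.path.join(share, "current.docx")
    for p in (old, new):
        with open(p, "wb") as f:
            f.write(b"x")
    eight_months_ago = time.time() - 240 * 86_400
    os.utime(old, (eight_months_ago, eight_months_ago))  # backdate the old file

    candidates = [object_key_for(p, share) for p in files_older_than(share, days=180)]
    print(candidates)  # ['projects/2023/report.pdf']
```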
