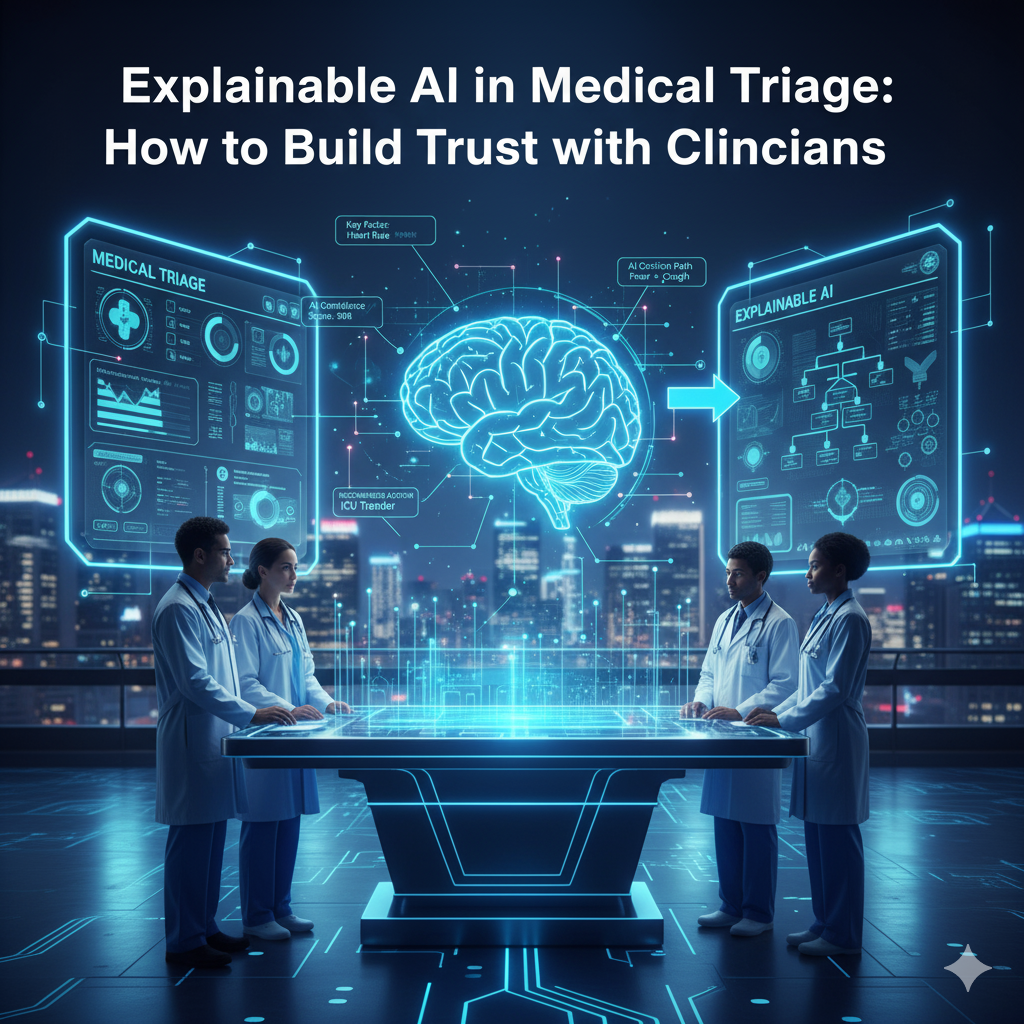

Artificial Intelligence (AI) is rapidly transforming healthcare — from early diagnostics to personalized treatment recommendations. Among its most impactful applications is AI-powered triage, which helps prioritize patient care based on urgency, symptoms, and risk. Yet, despite its potential, many clinicians hesitate to rely fully on AI systems.

The reason? Lack of explainability.

When healthcare professionals cannot understand how or why an AI model makes a certain decision, trust becomes the biggest barrier. This is where Explainable AI (XAI) comes into play. It bridges the gap between complex algorithms and human reasoning, helping doctors and nurses trust AI triage systems in real clinical environments.

In this article, we’ll explore how explainable AI can transform medical triage systems, the challenges of building clinician trust, and how an AI app development company can use generative AI development to design interpretable, compliant, and scalable triage solutions.

What Is Explainable AI in Healthcare?

Explainable AI (XAI) refers to systems that make their decision-making process understandable to humans. In black-box models such as deep neural networks, each decision emerges from millions of learned parameters, which makes it very difficult to trace the logic behind an individual output.

For example:

When an AI triage system flags a patient as “high risk” based on their symptoms and vitals, the clinician must understand why the system reached that conclusion—whether it was due to abnormal heart rate, medical history, or a combination of both.

Without explainability, doctors face ethical and legal risks if they act on AI suggestions without a clear rationale.

Explainable AI helps bridge this trust gap by:

- Providing transparent insights into decision factors

- Highlighting key data points influencing recommendations

- Allowing clinicians to validate and override decisions
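To make the first of these points concrete, here is a minimal sketch of an inherently interpretable, points-based triage score in which every point added to the total carries its own human-readable reason. The `Vitals` fields, thresholds, and point values are hypothetical placeholders loosely modelled on early-warning-style scores; they are not clinically validated.

```python
from dataclasses import dataclass


@dataclass
class Vitals:
    heart_rate: int        # beats per minute
    spo2: int              # oxygen saturation, %
    resp_rate: int         # breaths per minute
    cardiac_history: bool  # prior cardiac diagnosis on record


def score_with_reasons(v: Vitals) -> tuple[int, list[str]]:
    """Return a total risk score plus the rule behind every point added.

    Thresholds and point values are hypothetical placeholders,
    not a validated clinical early-warning score.
    """
    points = 0
    reasons: list[str] = []

    if v.heart_rate > 120:
        points += 3
        reasons.append(f"heart rate {v.heart_rate} bpm > 120 (+3)")
    elif v.heart_rate > 100:
        points += 1
        reasons.append(f"heart rate {v.heart_rate} bpm > 100 (+1)")

    if v.spo2 < 90:
        points += 3
        reasons.append(f"SpO2 {v.spo2}% < 90 (+3)")
    elif v.spo2 < 94:
        points += 1
        reasons.append(f"SpO2 {v.spo2}% < 94 (+1)")

    if v.resp_rate > 24:
        points += 2
        reasons.append(f"respiratory rate {v.resp_rate}/min > 24 (+2)")

    if v.cardiac_history:
        points += 2
        reasons.append("prior cardiac history (+2)")

    return points, reasons


score, reasons = score_with_reasons(Vitals(heart_rate=128, spo2=92, resp_rate=18, cardiac_history=True))
print(score)  # 6
for r in reasons:
    print(" -", r)
```

Because the score is a plain sum of rules, the explanation is exact rather than approximated after the fact. The trade-off is that rule-based models like this can be less accurate than complex learned models, which is the complexity-versus-interpretability tension this article returns to below.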

The Role of AI in Medical Triage Systems

AI triage systems analyze patient data—including symptoms, vital signs, lab results, and even EHR notes—to prioritize treatment. These systems can:

- Identify critical cases faster

- Reduce emergency department (ED) crowding

- Support resource allocation

- Enable early intervention for high-risk patients
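Once a model has produced a risk score, the prioritization step itself can be simple. Assuming the model emits one numeric score per patient, a sketch of a queue that always surfaces the highest-risk patient next (with earlier arrivals winning ties) might look like this; `TriageQueue` and the bed identifiers are hypothetical.

```python
import heapq
from itertools import count


class TriageQueue:
    """Pops the highest-risk patient first; earlier arrivals win ties."""

    def __init__(self) -> None:
        self._heap: list[tuple[float, int, str]] = []
        self._arrival = count()  # monotonically increasing tie-breaker

    def add(self, patient_id: str, risk_score: float) -> None:
        # heapq is a min-heap, so store the negated score to pop the largest first.
        heapq.heappush(self._heap, (-risk_score, next(self._arrival), patient_id))

    def next_patient(self) -> str:
        _, _, patient_id = heapq.heappop(self._heap)
        return patient_id

    def __len__(self) -> int:
        return len(self._heap)


queue = TriageQueue()
queue.add("bed-4", 0.35)
queue.add("bed-7", 0.91)
queue.add("bed-2", 0.91)
print([queue.next_patient() for _ in range(len(queue))])  # ['bed-7', 'bed-2', 'bed-4']
```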

However, even as the accuracy of AI triage models has improved, interpretability remains the missing piece.

A highly accurate model is valuable, but if clinicians can’t explain its reasoning to patients or auditors, it risks being sidelined — no matter how smart it is.

Why Explainability Matters in Medical Triage

1. Clinician Trust

Healthcare professionals are trained to base decisions on evidence and clinical reasoning. A black-box AI that outputs “admit this patient urgently” without justification can create skepticism and resistance.

Explainability allows clinicians to see which symptoms, test results, or trends led to the AI’s recommendation — building confidence in its use.

2. Legal and Ethical Accountability

AI triage systems influence patient care decisions, so hospitals and developers must ensure their algorithms comply with medical ethics and with legal and regulatory frameworks such as HIPAA, GDPR, and FDA guidance on AI/ML-enabled medical devices. Explainable models make it easier to audit decision pathways and demonstrate compliance.

3. Patient Communication

When clinicians can interpret AI decisions, they can better explain treatment priorities to patients and families. This improves transparency and patient satisfaction, especially in critical care scenarios.

4. Continuous Learning

Explainability helps medical researchers and developers identify model weaknesses, bias, and drift. With visibility into the “why,” teams can fine-tune models continuously for better accuracy.
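Drift is one of the weaknesses this visibility helps catch. One common statistic for it is the Population Stability Index (PSI), which compares how a feature's values are spread across bins at training time versus in recent data. The sketch below assumes hand-picked bin edges and illustrative SpO2 readings. A frequently quoted rule of thumb treats a PSI above roughly 0.25 as a significant shift, but any threshold should be set per deployment.

```python
import math
from bisect import bisect_right


def psi(baseline: list[float], recent: list[float], edges: list[float], eps: float = 1e-6) -> float:
    """Population Stability Index of `recent` against `baseline`.

    `edges` are sorted interior bin boundaries shared by both samples.
    Empty bins are floored at `eps` so the logarithm stays finite.
    """
    def proportions(values: list[float]) -> list[float]:
        counts = [0] * (len(edges) + 1)
        for v in values:
            counts[bisect_right(edges, v)] += 1
        return [max(c / len(values), eps) for c in counts]

    expected = proportions(baseline)
    actual = proportions(recent)
    return sum((a - e) * math.log(a / e) for a, e in zip(actual, expected))


# SpO2 readings at training time vs. this month (illustrative numbers only)
training_spo2 = [96, 97, 98, 95, 92, 99, 97, 96, 93, 98]
recent_spo2 = [88, 91, 92, 95, 89, 93, 96, 90, 94, 87]
print(round(psi(training_spo2, recent_spo2, edges=[90, 94]), 2))
```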

Challenges of Explainable AI in Healthcare

While explainability sounds ideal, implementing it in high-stakes healthcare environments poses challenges:

- Complexity vs. Interpretability: Simplifying a model to make it interpretable can reduce its predictive performance, so finding the right balance between accuracy and transparency is crucial.

- Data Bias: Historical data may reflect demographic, socioeconomic, or regional biases. Even explainable AI must handle such biases responsibly.

- Integration with Clinical Workflows: AI explanations should be presented in intuitive, clinician-friendly formats, within EHR dashboards or triage software interfaces.

- Lack of Standardization: There’s no universal framework defining what “explainable” means in medical AI, which makes regulatory compliance a moving target.

This is where AI app development companies specializing in healthcare step in—combining domain expertise with generative AI development frameworks to create interpretable, trustworthy solutions.

How an AI App Development Company Builds Explainable Triage Systems

To bridge the gap between AI performance and clinician trust, leading AI app development companies employ a multi-layered approach:

1. Data Transparency

Developers ensure that training datasets are clearly documented, detailing their sources, demographics, and preprocessing methods. This helps clinicians understand the context behind AI predictions.
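One lightweight way to keep that documentation from going stale is to store it as a structured record next to the model and check it automatically. The sketch below uses a hypothetical `DatasetCard` structure, loosely inspired by the "datasheets for datasets" idea; the field names and example values are assumptions, not a standard schema.

```python
from dataclasses import dataclass, field, fields


@dataclass
class DatasetCard:
    """Machine-readable summary of a training dataset (field names are illustrative)."""
    name: str
    sources: list[str] = field(default_factory=list)
    collection_period: str = ""
    demographics: dict[str, float] = field(default_factory=dict)  # share of records per group
    preprocessing: list[str] = field(default_factory=list)

    def missing_fields(self) -> list[str]:
        """Names of documentation fields that are still empty."""
        return [f.name for f in fields(self) if not getattr(self, f.name)]


card = DatasetCard(
    name="ed_visits_example",
    sources=["hospital EHR export"],
    preprocessing=["dropped records without recorded vitals"],
)
print(card.missing_fields())  # ['collection_period', 'demographics']
```

A check like `missing_fields()` can run in a release pipeline so that a model whose training data is undocumented never reaches clinicians.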

2. Model Interpretability Tools

Tools like LIME (Local Interpretable Model-Agnostic Explanations) and SHAP (SHapley Additive exPlanations) are integrated into triage models to show how much each feature contributed to an individual prediction, e.g., that “chest pain” and “oxygen level” together accounted for 60% of the decision score.
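SHAP is built on Shapley values from game theory: a feature's contribution is its average marginal effect on the prediction across all subsets of the other features. The sketch below computes those values exactly, by brute force, for a hypothetical three-feature risk model. It is not the `shap` library's API, and it simplifies by substituting a single reference patient for "absent" features, whereas SHAP typically averages over a background dataset and uses faster approximations.

```python
from itertools import combinations
from math import factorial


def shapley_values(model, x: dict, baseline: dict) -> dict:
    """Exact Shapley values of each feature for the single prediction model(x).

    A feature left out of a coalition is replaced by its baseline value.
    The loop visits every subset of features, so cost grows as 2**n:
    fine for a handful of features, infeasible for hundreds.
    """
    names = list(x)
    n = len(names)

    def value(coalition: set) -> float:
        inputs = {f: (x[f] if f in coalition else baseline[f]) for f in names}
        return model(inputs)

    phi = {}
    for f in names:
        others = [g for g in names if g != f]
        total = 0.0
        for k in range(n):  # coalition sizes 0 .. n-1, not counting f itself
            weight = factorial(k) * factorial(n - k - 1) / factorial(n)
            for subset in combinations(others, k):
                s = set(subset)
                total += weight * (value(s | {f}) - value(s))
        phi[f] = total
    return phi


def toy_risk(v: dict) -> float:
    """Hypothetical risk model with one interaction term (not clinically derived)."""
    hypoxic = 1 if v["spo2"] < 92 else 0
    return (0.02 * max(v["heart_rate"] - 80, 0)
            + 0.05 * max(95 - v["spo2"], 0)
            + 0.3 * v["chest_pain"]
            + 0.1 * v["chest_pain"] * hypoxic)


patient = {"heart_rate": 125, "spo2": 88, "chest_pain": 1}
reference = {"heart_rate": 80, "spo2": 97, "chest_pain": 0}
phi = shapley_values(toy_risk, patient, reference)
for name, contribution in sorted(phi.items(), key=lambda kv: -kv[1]):
    print(f"{name:>11}: {contribution:+.3f}")
```

Two properties make these values useful as explanations: they always sum to `model(x) - model(baseline)`, so the contributions fully account for the score, and a purely additive term (heart rate here) gets exactly its own effect, while the chest-pain/low-oxygen interaction is split evenly between the two features involved.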

3. Generative AI for Scenario Simulation

Through generative AI development, models can simulate “what-if” scenarios — showing how different symptom combinations affect outcomes. This allows clinicians to test model reasoning dynamically.

For example:

A generative AI-based triage assistant can explain,

“If the patient’s oxygen saturation dropped below 90%, the model’s urgency score would increase by 40%.”
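Underneath, a what-if answer like this comes from re-scoring the same patient with one input changed; a generative assistant would then put the result into words. A minimal sketch, assuming a hypothetical logistic `urgency_score` model with made-up coefficients and a hypothetical `what_if` helper:

```python
import math


def urgency_score(v: dict) -> float:
    """Hypothetical logistic urgency model; coefficients are illustrative only."""
    z = -4.0 + 0.03 * v["heart_rate"] - 0.08 * (v["spo2"] - 95) + 1.2 * v["chest_pain"]
    return 1 / (1 + math.exp(-z))


def what_if(model, patient: dict, **changes) -> tuple[float, float, float]:
    """Score the patient as recorded and with `changes` applied.

    Returns (score_before, score_after, relative_change). The original
    record is left untouched because the changes go into a new dict.
    """
    before = model(patient)
    after = model({**patient, **changes})
    return before, after, (after - before) / before


before, after, rel = what_if(urgency_score, {"heart_rate": 110, "spo2": 94, "chest_pain": 1}, spo2=88)
print(f"urgency {before:.2f} -> {after:.2f} ({rel:+.0%})")
```

The relative change is reported against the original score to match the "increase by X%" phrasing in the quote; for a probability-like score, showing the absolute before and after values alongside it is usually easier for clinicians to read.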

4. Human-in-the-Loop (HITL) Design

Clinicians remain part of the feedback loop, validating model predictions and adjusting logic when necessary. This ensures real-world relevance and continuous learning.
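A minimal sketch of what closing that loop could involve: record each case where a clinician reviews the model's suggested triage level, then summarize how often they agree and which overrides recur. `Review` and `summarize_reviews` are hypothetical names; a production system would also persist these records for audit and retraining.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class Review:
    patient_id: str
    model_level: str      # triage level suggested by the model
    clinician_level: str  # triage level the clinician actually assigned
    note: str = ""        # free-text reason, useful when the levels differ


def summarize_reviews(reviews: list[Review]) -> tuple[float, Counter]:
    """Return the model/clinician agreement rate and a tally of override patterns."""
    overrides = [r for r in reviews if r.model_level != r.clinician_level]
    agreement = 1 - len(overrides) / len(reviews)
    patterns = Counter((r.model_level, r.clinician_level) for r in overrides)
    return agreement, patterns


reviews = [
    Review("p1", "urgent", "urgent"),
    Review("p2", "standard", "urgent", note="new chest pain at re-check"),
    Review("p3", "urgent", "urgent"),
    Review("p4", "standard", "standard"),
]
agreement, patterns = summarize_reviews(reviews)
print(agreement)                # 0.75
print(patterns.most_common(1))  # [(('standard', 'urgent'), 1)]
```

A recurring pattern such as "standard upgraded to urgent" points developers to the cases most worth examining with the explanation tools above.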

5. Explainable Dashboards

Modern triage apps present AI reasoning through interactive dashboards, visualizing decision factors like symptom severity, vital sign deviations, and prior patient outcomes. This makes the model’s reasoning quick to interpret during emergencies.

Generative AI’s Role in Explainable Triage Systems

Generative AI isn’t just about creating content—it’s also reshaping how AI models explain themselves.

By integrating generative AI development frameworks, developers can design systems that:

- Generate human-readable explanations for AI outputs

- Translate numerical data into narrative summaries (“Patient is at high risk due to elevated pulse, low oxygen, and history of COPD.”)

- Create synthetic but realistic datasets for safe model training and testing

- Enhance dialogue-based triage assistants that communicate reasoning in natural language

For instance, a generative AI-based triage chatbot can not only assess a patient’s condition but also explain why it reached a certain risk level — improving transparency and trust in digital triage tools.

Benefits of Explainable AI in Triage for Healthcare Providers

- Improved Clinical Decision-Making: Clinicians can validate AI recommendations and combine them with their own expertise for more accurate decisions.

- Faster Emergency Response: When doctors understand the model’s reasoning, they can act faster without second-guessing AI outcomes.

- Reduced Bias and Errors: Transparent models allow teams to identify unfair predictions (e.g., systematically worse performance for specific demographic groups).

- Regulatory Compliance: Explainability supports compliance with emerging global healthcare AI regulations.

- Enhanced Collaboration: Doctors, nurses, and data scientists can collaborate effectively when the model’s reasoning is visible and interpretable.
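A common first check for unfair predictions in triage is to compare how often truly urgent patients are missed in each patient group. A minimal sketch with illustrative group labels and records; a gap between groups is a signal to investigate further, not proof of bias on its own.

```python
from collections import defaultdict


def false_negative_rate_by_group(records) -> dict[str, float]:
    """Share of truly urgent patients the model failed to flag, per group.

    `records` is an iterable of (group, truly_urgent, flagged_urgent) tuples.
    Groups with no truly urgent patients are omitted (the rate is undefined).
    """
    urgent = defaultdict(int)
    missed = defaultdict(int)
    for group, truly_urgent, flagged in records:
        if truly_urgent:
            urgent[group] += 1
            if not flagged:
                missed[group] += 1
    return {g: missed[g] / urgent[g] for g in urgent}


records = [
    ("group A", True, True), ("group A", True, True), ("group A", True, False),
    ("group A", True, True), ("group A", False, False),
    ("group B", True, False), ("group B", True, True), ("group B", False, True),
]
print(false_negative_rate_by_group(records))  # {'group A': 0.25, 'group B': 0.5}
```

False negatives are the focus here because in triage a missed urgent case is usually the costlier error; the same loop can be adapted to compare false-positive rates.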

Case Example: Explainable AI in Real-World Triage

A leading hospital network partnered with an AI app development company to build a triage assistant for emergency departments.

The Challenge:

The hospital’s previous AI system accurately flagged high-risk patients but provided no reasoning. Clinicians were reluctant to use it due to accountability concerns.

The Solution:

The development team integrated explainable AI layers with generative AI modules that translated model outputs into narrative summaries.

Example Output:

“Patient classified as critical due to chest tightness, heart rate >120 bpm, and prior cardiac history. Similar cases show 85% chance of needing immediate attention.”

Results:

- Clinician trust increased by 40% (based on internal surveys)

- Emergency response time reduced by 18%

- Adoption rate of AI triage tools doubled in 6 months

This showcases how generative AI development can bring both interpretability and efficiency to medical triage.
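The source does not say how the "similar cases" figure in the example output was derived. One plausible approach is a nearest-neighbour lookup: find the past patients most similar to the current one and report how many of them needed immediate attention. A minimal sketch, assuming pre-scaled feature vectors and a hypothetical `similar_case_rate` helper:

```python
import math


def similar_case_rate(query: tuple[float, ...], past_cases, k: int = 5) -> float:
    """Fraction of the k nearest past cases that needed immediate attention.

    `past_cases` is a list of (feature_vector, needed_immediate_attention) pairs.
    Features should be scaled to comparable ranges first, since Euclidean
    distance otherwise favours whichever feature has the largest units.
    """
    nearest = sorted(past_cases, key=lambda case: math.dist(query, case[0]))[:k]
    return sum(1 for _, needed in nearest if needed) / len(nearest)


past = [
    ((0.0, 0.0), True),
    ((0.0, 1.0), True),
    ((1.0, 0.0), False),
    ((10.0, 10.0), False),
    ((11.0, 10.0), False),
]
print(round(similar_case_rate((0.0, 0.0), past, k=3), 2))  # 0.67
```

Besides the percentage, the retrieved neighbours can themselves be shown to clinicians as precedent cases, which is often more persuasive than a bare number.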

Future of Explainable AI in Healthcare

In the coming years, explainable AI will become a regulatory requirement rather than an optional feature. Emerging rules and guidance, such as the FDA’s guidance on AI-enabled medical devices and the EU AI Act, emphasize traceability, accountability, and human oversight in clinical AI.

The next wave of innovation will include:

- Conversational explainable AI agents for real-time clinical assistance

- Multi-modal reasoning combining voice, imaging, and sensor data

- Self-explaining generative AI models that can auto-justify their recommendations

Healthcare providers that partner with forward-looking AI app development companies will gain a competitive edge in both compliance and care quality.

Conclusion

Explainable AI is no longer a technical luxury—it’s a clinical necessity. In medical triage, where every second counts, trust is as vital as accuracy.

By combining MLOps best practices, generative AI development, and explainable model design, AI solutions can strike the right balance between performance and transparency.

Partnering with an experienced AI app development company ensures that your triage systems are not only powerful but also interpretable, ethical, and aligned with real-world clinical needs.

Final Thought

AI can assist, but it cannot replace human judgment. With explainability at its core, medical AI can finally achieve what it promises — a partnership where clinicians and algorithms work together to save lives.
